Stories of My Experiments with "Distroless" Containers

If you're like me, you're probably using containers to deploy and run applications. Containers are an incredibly convenient way to package and run your applications consistently, with all the necessary dependencies. However, most containers I typically built were based on an operating-system Docker base image. Early in my container adoption days, it was Ubuntu 14.04, which typically resulted in massive images of nothing less than 1GB each. More recently, as the Docker community started using Alpine Linux to build minimal images, I started to use Alpine too. Alpine is essentially BusyBox with a package manager. Alpine was much lighter than Ubuntu, but it was still a full-fledged operating system image.

However, one thing was common to both situations. My Docker images were wayyy larger than they needed to be. That's because I was running an entire OS image, with package managers, shells, and myriad utils that were completely unnecessary for my applications. My apps were typically just a Python or NodeJS app, or a single binary, that I needed running in a container. Running an entire OS just for this was overkill not only from a performance perspective, but from a security perspective as well.

I think the logic behind this is pretty simple. The more code you run in your application environment, the higher the chance of a vulnerability creeping into said environment. This directly correlates with the possibility of pwnage of said environment, with an attacker being able to leverage existing exploits against the programs running in the OS.

This is when I found distroless from Google. Distroless images allow you to package only your application and its dependencies in a container image and run the container with a really light footprint. Distroless container images come with NO package manager, shell, or other programs that come with a typical OS container image, thereby reducing not only unnecessary code, but the attack surface along with it.
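One way to see this for yourself is to check which common tools are actually present inside an image. Below is a minimal sketch, not an official distroless utility: the tool list and the `find_tools` helper are my own illustrative assumptions, and the script sticks to the standard library so that it should also run on Python 2.7.

```python
from __future__ import print_function

import os

# Hypothetical list of tools to look for; adjust it to your own needs.
TOOLS = ["sh", "bash", "apt-get", "apk", "curl", "wget"]


def find_tools(names, search_path=None):
    """Map each tool name to its full path, or None if it is not on the search path."""
    if search_path is None:
        search_path = os.environ.get("PATH", "")
    dirs = [d for d in search_path.split(os.pathsep) if d]
    found = {}
    for name in names:
        found[name] = None
        for directory in dirs:
            candidate = os.path.join(directory, name)
            # Only count regular files that are marked executable.
            if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
                found[name] = candidate
                break
    return found


if __name__ == "__main__":
    for tool, location in sorted(find_tools(TOOLS).items()):
        print("%-8s %s" % (tool, location or "not found"))
```

Because a distroless image has no shell to exec into, a script like this would have to be copied into the image and run by the Python interpreter itself. On an Ubuntu or Alpine based image I would expect several of these tools to show up, while on a distroless image most or all of them should be reported as not found.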

Since v17.05, Docker has supported multi-stage builds, which help in composing minimal images. When building Docker images, it's a challenge to keep the size of the image down. Each instruction in a Dockerfile adds a layer (and code) to the Docker image. Multi-stage builds allow you to build the program in one container image and copy only the required artifacts from that first image to a target image, which is what is used to run your program. Here's an example of a multi-stage build from the Docker website:

FROM golang:1.7.3

WORKDIR /go/src/github.com/alexellis/href-counter/

RUN go get -d -v golang.org/x/net/html

COPY app.go .

RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -o app .

FROM alpine:latest

RUN apk --no-cache add ca-certificates

WORKDIR /root/

COPY --from=0 /go/src/github.com/alexellis/href-counter/app .

CMD ["./app"]

In the above example, you'll observe that there are 2 FROM statements. The first (build) stage downloads the dependencies and compiles the Go program. The second (target) stage uses COPY with the --from=0 flag to copy the compiled binary out of the first stage and run it in the target image.

Distroless containers leverage the same feature: I can build my Python package in a build container image, which doesn't need to be hardened or minimal, and copy all the "built" artifacts to a target distroless image that contains only the application and its runtime dependencies.

Example

In this example, I am going to demonstrate distroless with a Python 2.7 Flask web application.

In the first example, I will run my Python app on an ubuntu:14.04 Docker image.

In the subsequent example, I will run the same Python app on a distroless python2.7 container image.

Ubuntu Image

FROM ubuntu:14.04

ADD . /app

WORKDIR /app

RUN apt-get update && apt-get install -y python-pip

RUN pip install -r requirements.txt

CMD ["python", "app.py"]

This is a pretty simple Dockerfile. I am using the ubuntu:14.04 base image, copying my files to the workdir /app, installing my dependencies, and running my Python program in the Ubuntu 14.04 container.

Distroless Image

FROM python:2.7-slim AS build-env

ADD . /app

WORKDIR /app

RUN pip install --upgrade pip

RUN pip install -r ./requirements.txt

FROM gcr.io/distroless/python2.7

COPY --from=build-env /app /app

COPY --from=build-env /usr/local/lib/python2.7/site-packages /usr/local/lib/python2.7/site-packages

WORKDIR /app

ENV PYTHONPATH=/usr/local/lib/python2.7/site-packages

CMD ["app.py"]

The distroless Dockerfile is a stark contrast to the Ubuntu example. I build my program and its dependencies in the first build container image, which I am calling build-env, and then copy the artifacts of build-env into a target distroless container based on the gcr.io/distroless/python2.7 image, which is meant to run only Python 2.7 applications and their dependencies. Note that the CMD is just ["app.py"]: the distroless Python image already sets the Python interpreter as its entrypoint, so the CMD only needs to supply the script, and PYTHONPATH points that interpreter at the site-packages directory copied from build-env. I am using Docker 18.09 and leveraging multi-stage builds for this.

After I built these images, I pushed them to Docker Hub and used Clair (Container Vulnerability Scanning Tool) to scan them for vulnerabilities. I also verified the results with a commercial container security scanning service, Anchore. The results were quite revealing.

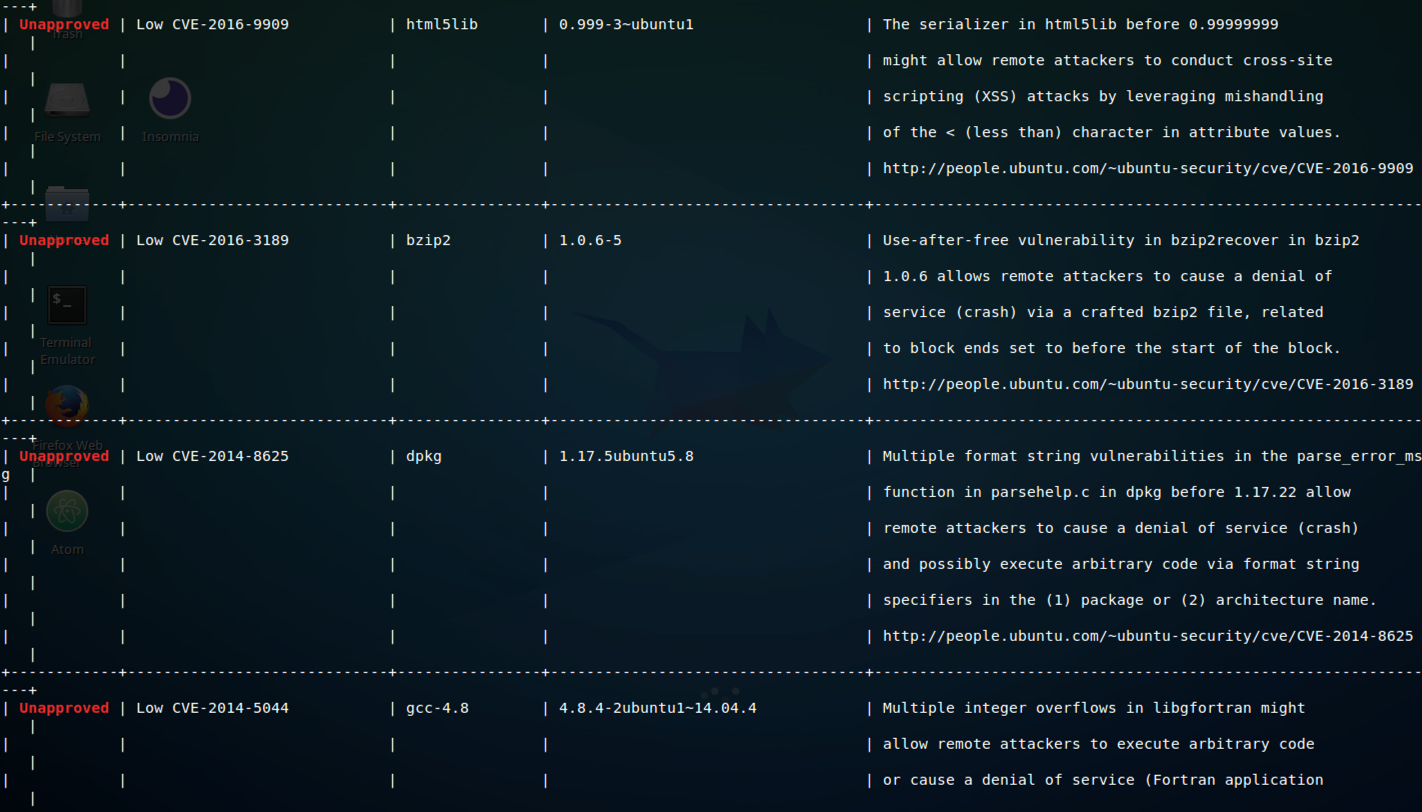

When I scanned the Flask app built on Ubuntu 14.04, I found a bunch of flaws right out of the gate. Some were medium and some low, but all of the flaws pertained to packages and apps that had NOTHING to do with my Python application.

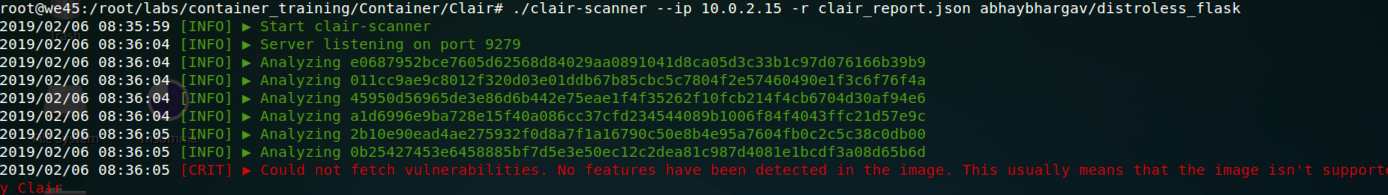

Running the same scan on the distroless container image gave me 0 findings with both Clair and Anchore. This is reasonably clear evidence that the distroless container didn't have any unnecessary packages that could lead to more vulnerabilities (and thus exploits) being identified.

In addition, I saw that my Python app in the distroless container image was 22 MB, while the Ubuntu 14.04 image was a whopping 273 MB. That is a pretty significant amount of bloat, just because I added an OS image to the mix.
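To put those two numbers in perspective, here is a quick back-of-the-envelope calculation. The `size_reduction` helper is just an illustrative name of mine, and the inputs are the image sizes reported above.

```python
from __future__ import print_function


def size_reduction(before_mb, after_mb):
    """Return (how many times smaller, percentage of space saved)."""
    ratio = before_mb / float(after_mb)
    saved_pct = 100.0 * (before_mb - after_mb) / before_mb
    return ratio, saved_pct


# Image sizes from the comparison above: Ubuntu 14.04 based vs distroless.
ratio, saved_pct = size_reduction(273, 22)
print("%.1fx smaller, %.1f%% saved" % (ratio, saved_pct))
# prints: 12.4x smaller, 91.9% saved
```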