Building a Static Analysis Security Bot with Gitlab

I am a big believer in Feedback loops. For me, DevSecOps is all about building better feedback loops. If I can get Dev or Ops folks to get quick security feedback on something they have written, with few/no false positives, in a workflow that they are used to, I am happy as a clam!

Which is why I really like Git-based workflows. I like these workflows because they check all of the boxes on my feedback loop list:

✅ They are close to, and part of, dev workflows. Developers regularly use and understand workflows that are centered around their Git repositories

✅ Lots of Git Source Code Hosting solutions (Github, Gitlab and Bitbucket) offer automation and integration possibilities and mature APIs to work with

So I set out to build the following, for a client-project I was working on. The workflow went something like this:

- Developer commits code to a secondary branch, for example

sprintbranch - The Developer creates a Pull-Request (in the case of Gitlab, its called a

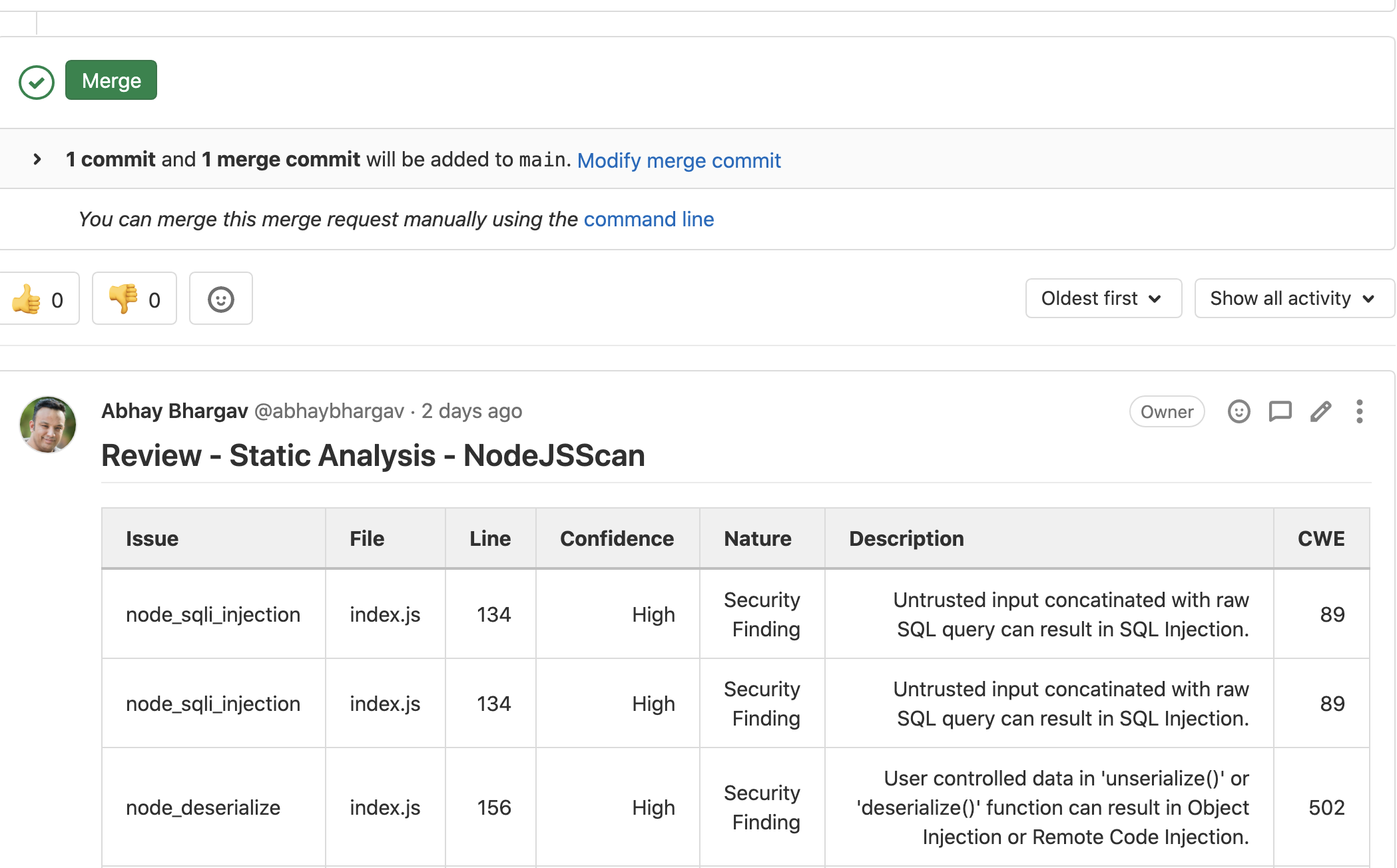

Merge Request) to merge their work into the main branch - As soon as the developer creates a Merge Request (MR), the contents of the Merge Requests (only the files edited in the Merge Request, mind you!), were to be subjected to a Security Static Analysis and written back to the MR as a Feedback Note with details of the vulnerabilities identified in the contents of the Merge Request

The idea for this was to ensure that:

- Every MR is subjected to an automated and reasonably accurate security review of the code

- Only the files changed in the MR were to be scanned and checked. This was a HUGE repository, and scanning even the developer's original commit branch would be overkill and a definite waste of time.

- It would only be invoked when specific types of files were modified/added in the Merge Request. In this case, it was `.js` files, as it was a NodeJS Project. There was really no point scanning file extensions and paths that weren't of significance in this case.
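The file-type gate described above can be sketched as a small filter over the changed paths. This is only a minimal sketch, not the project's actual code; the function name `select_scannable` and its `extensions` parameter are hypothetical.

```python
from pathlib import PurePosixPath


def select_scannable(paths, extensions=(".js",)):
    """Return only the paths whose file extension is in `extensions`."""
    wanted = {ext.lower() for ext in extensions}
    return [p for p in paths if PurePosixPath(p).suffix.lower() in wanted]


# Example: only the JavaScript sources survive the filter
changed = ["src/app.js", "README.md", "lib/db/query.js", "package.json"]
print(select_scannable(changed))
```

Comparing the real suffix (rather than a plain `endswith`) keeps files such as `package.json` out of the scan list.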

Components of the Solution

With the problem statement in hand, I set out to build a quick solution for this with:

- Gitlab CI/CD => Integrated CI/CD Runner with Gitlab that runs based on a CI workflow specified by a `.gitlab-ci.yml` file in the root path of the source code repository

- NodeJSScan with Semgrep => NodeJSScan was always a pretty reliable tool to identify security flaws in NodeJS apps. But with its new Semgrep integration, it's much more incisive in identifying vulnerabilities quickly and, more importantly, accurately.

- Gitlab's API => Gitlab's REST API that I would use to drill down into the commit, get all the files affected by the commit and then scan those files

- Custom Code => I ended up building a small python utility that would leverage Gitlab's API and NodeJSScan (which also comes with a python API) to dissect the commit for relevant files, build a list of these files for NodeJSScan to scan, and write the results of my security analysis back to the MR as a Feedback comment

Approach and Steps to Implementing a Solution

Triggering CI/CD Jobs on Merge-Requests only

Gitlab CI/CD allows you to trigger jobs whenever code is committed in the repository. This is configured by creating a .gitlab-ci.yml file in the root directory of the source repository with the specific jobs and the stages those jobs should be run as part of. For example

```yaml
before_script:
  - mkdir -p $GOPATH/src/$(dirname $REPO_NAME)
  - ln -svf $CI_PROJECT_DIR $GOPATH/src/$REPO_NAME
  - cd $GOPATH/src/$REPO_NAME

stages:
  - test
  - build
  - deploy

format:
  stage: test
  script:
    - go fmt $(go list ./... | grep -v /vendor/)
    - go vet $(go list ./... | grep -v /vendor/)
    - go test -race $(go list ./... | grep -v /vendor/)

compile:
  stage: build
  script:
    - go build -race -ldflags "-extldflags '-static'" -o $CI_PROJECT_DIR/mybinary
  artifacts:
    paths:
      - mybinary
```

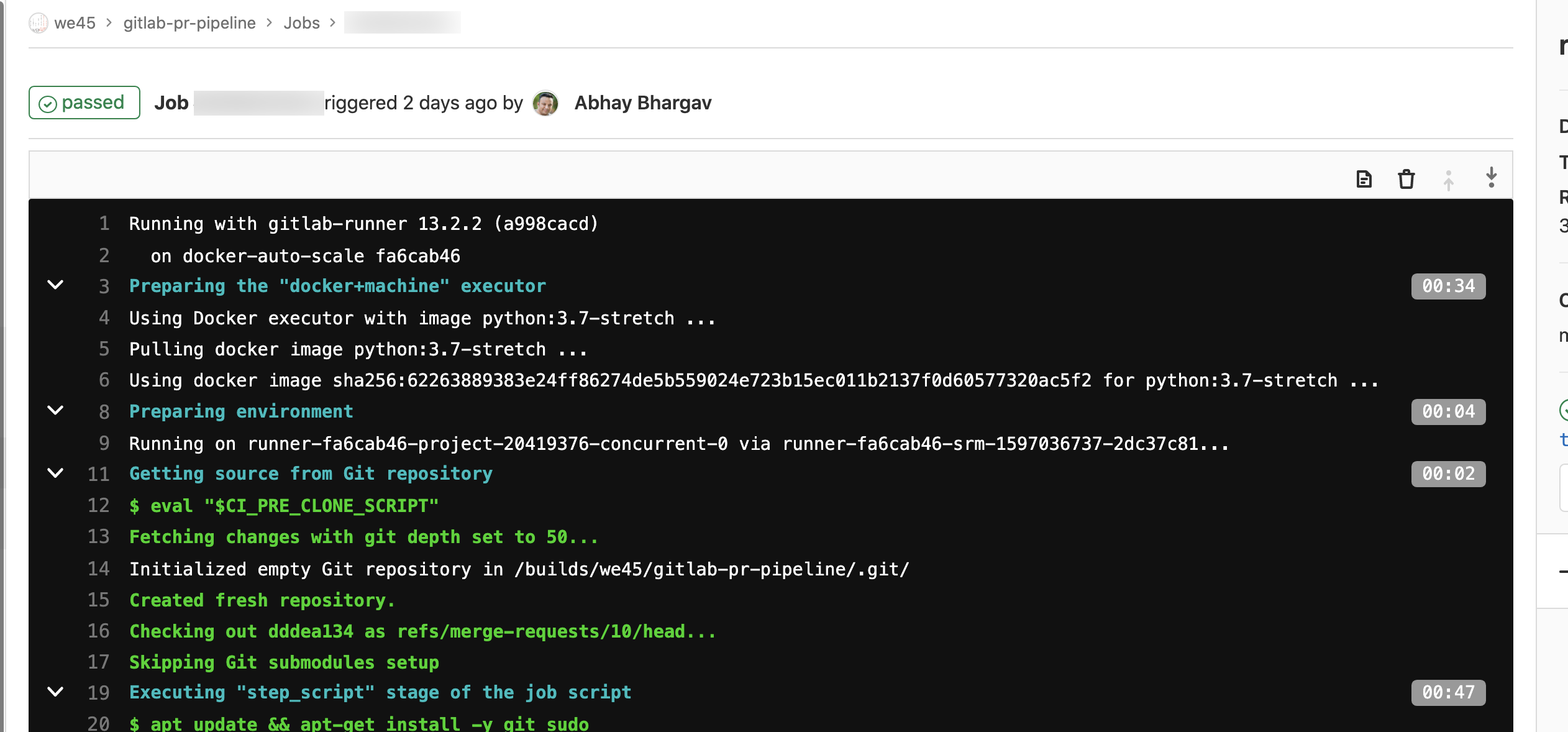

Gitlab's CI utilizes "runners" to run the jobs, and you also need to specify the docker image that you want to run the job with. You can configure the sequence of the jobs, as well as pre and post steps in the form of `before_script` and `after_script` hooks that are triggered before and after the job.

My Gitlab-CI file looks like this. I'll explain:

```yaml
stages:
  - test

run_python_mr_bot:
  image: python:3.7-stretch
  stage: test
  only:
    - merge_requests
  before_script:
    - apt update && apt-get install -y git sudo
  script:
    - sudo pip install GitlabMRScanner==0.0.3
    - echo "{\"branch\":\"${CI_COMMIT_REF_NAME}\", \"token\":\"$PA_TOKEN\", \"project_id\":${CI_MERGE_REQUEST_PROJECT_ID}, \"hash\":\"${CI_COMMIT_SHORT_SHA}\", \"pr_id\":${CI_MERGE_REQUEST_IID}}" > config.json
    - mr-bot
```

In the above YAML file, let's explore the configs:

- This only triggers on `merge_requests`, as specified by the `only` key with a reference to `merge_requests`.

- The `GitlabMRScanner` that is being installed in the `script` section of the job refers to the custom code I wrote to actually sift through the commit, get the code, scan it and write results back to the MR (more on this later)

- Gitlab, by default, injects certain environment variables into the CI job runner for the job to access. Gitlab's documentation has a useful list of all the env-vars that it injects into its CI jobs, which can be accessed by the scripts in these jobs

- Nearly all the env-vars I have used are the pre-defined env-vars available to the CI job that I am running. In addition, the `PA_TOKEN` env-var is a masked/protected env-var that is my Personal Access Token. This is a Gitlab API token that is required for me to access the contents of the Merge Requests and write results back to the merge request.

- My code, namely the CLI `mr-bot`, looks for a file called `config.json`, which has all of these env-vars in a JSON file, and uses them to run the static analysis workflow against the contents of the commit
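As an alternative to hand-escaping JSON in an `echo` command, the same `config.json` contents could be produced from the CI environment variables with a few lines of Python. This is a sketch under assumptions: the helper name `build_config` is hypothetical and is not part of `GitlabMRScanner`, the sample values are made up, and the keys simply mirror the ones written by the CI job above.

```python
import json


def build_config(env):
    """Map Gitlab CI environment variables onto the keys used in config.json."""
    return {
        "branch": env["CI_COMMIT_REF_NAME"],
        "token": env["PA_TOKEN"],
        "project_id": int(env["CI_MERGE_REQUEST_PROJECT_ID"]),
        "hash": env["CI_COMMIT_SHORT_SHA"],
        "pr_id": int(env["CI_MERGE_REQUEST_IID"]),
    }


# Illustrative values only; in a CI job these come from the environment
sample_env = {
    "CI_COMMIT_REF_NAME": "sprintbranch",
    "PA_TOKEN": "example-token",
    "CI_MERGE_REQUEST_PROJECT_ID": "1234",
    "CI_COMMIT_SHORT_SHA": "a1b2c3d4",
    "CI_MERGE_REQUEST_IID": "7",
}
print(json.dumps(build_config(sample_env), indent=2))
```

In a real job, `os.environ` would be passed in place of `sample_env` and the result written to `config.json` with `json.dump`, which takes care of quoting and escaping.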

GitlabMRScanner

While Gitlab's CI is being used to run the job, the real heavy-lifting is being done by my custom code, named GitlabMRScanner. This is a small python utility that does the following:

- Identifies all the files affected by the commit (developer's commit) to the secondary branch with Gitlab's REST API (input from the `config.json` file)

- Reads the affected files from the branch and writes them to a local file-system, again using Gitlab's REST API

```python
import ntpath
from base64 import b64decode
from urllib.parse import quote

import gitlab
import requests


def get_pr_results(ci_token, project_id, branch, hash):
    gl = gitlab.Gitlab('https://www.gitlab.com', private_token=ci_token)
    project = gl.projects.get(project_id)
    commit = project.commits.get(hash)
    diff = commit.diff()
    file_list = []
    vul_list = []
    for single in diff:
        path = single.get('new_path')
        basename = ntpath.basename(path)
        if basename.endswith('.js'):
            # The files API expects the file path to be URL-encoded (slashes included)
            url = "https://www.gitlab.com/api/v4/projects/{}/repository/files/{}?ref={}".format(
                project_id, quote(path, safe=''), branch)
            r = requests.get(url, headers={"PRIVATE-TOKEN": ci_token})
            # The API returns the file content base64-encoded
            content = b64decode(r.json().get('content').encode()).decode()
            with open(basename, 'w') as myfile:
                myfile.write(content)
            file_list.append(basename)
```
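One caveat with diffing a single commit is that a Merge Request containing several commits may touch files that the latest commit does not. Gitlab's merge request changes endpoint (exposed in python-gitlab as `mr.changes()`) returns a payload with a `changes` list covering the whole MR. The sketch below extracts the scannable paths from such a payload and skips deleted files, which cannot be fetched from the branch. The function name `paths_from_mr_changes` is hypothetical, and the sample payload is a trimmed illustration of the response shape, not real output.

```python
def paths_from_mr_changes(payload, extension=".js"):
    """Collect new_path for every changed file that still exists and matches `extension`."""
    paths = []
    for change in payload.get("changes", []):
        if change.get("deleted_file"):
            continue  # nothing to fetch or scan for a deleted file
        path = change.get("new_path", "")
        if path.endswith(extension):
            paths.append(path)
    return paths


# Trimmed, made-up payload in the shape of the MR changes response
sample = {
    "changes": [
        {"new_path": "routes/user.js", "deleted_file": False},
        {"new_path": "docs/setup.md", "deleted_file": False},
        {"new_path": "lib/legacy.js", "deleted_file": True},
    ]
}
print(paths_from_mr_changes(sample))
```

With python-gitlab, the payload would presumably come from `project.mergerequests.get(mr_iid).changes()`, where `mr_iid` is the `pr_id` value from `config.json`.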

- Uses the NodeJSScan Python library `njsscan` to run SAST against only the affected files and write results to a JSON dictionary

```python
    # (continues inside get_pr_results)
    # requires: from njsscan.njsscan import NJSScan
    scanner = NJSScan(file_list, json=True, check_controls=False)
    results = scanner.scan()
    if 'nodejs' in results and results.get('nodejs'):
        sast = results.get('nodejs')
        for vname, vdict in sast.items():
            vul_dict = {
                "name": vname,
                "description": vdict.get('metadata').get('description')
            }
            # e.g. "CWE-79: ..." -> "79"
            cwe = str(vdict.get('metadata').get(
                'cwe')).split(":")[0].split("-")[1]
            evidences = []
            files = vdict.get('files')
            for single_file in files:
                single_evid = {
                    "url": single_file.get("file_path"),
                    "line_number": single_file.get('match_lines')[0],
                    "log": single_file.get('match_string')
                }
                evidences.append(single_evid)
            vul_dict['cwe'] = int(cwe)
            vul_dict['evidences'] = evidences
            vul_list.append(vul_dict)
```

- Formats the JSON into a nice little Markdown table as a Merge Request Feedback Note for the developer to review and take action against

```python
# vul_dict here is the list of findings built in the previous step
gl = gitlab.Gitlab('https://www.gitlab.com', private_token=ci_token)
project = gl.projects.get(project_id)
mdlist = []
mr = project.mergerequests.get(mr_id)
mdh1 = "## Review - Static Analysis - NodeJSScan\n\n"
mdtable = "| Issue | File | Line | Confidence | Nature | Description | CWE |\n"
mdheader = "|-------|:----------:|------:|------:|------:|------:|------:|\n"
mdlist.append(mdh1)
mdlist.append(mdtable)
mdlist.append(mdheader)
for single in vul_dict:
    name = single.get('name')
    desc = single.get('description')
    cwe = single.get('cwe', 0)
    if 'evidences' in single and single.get('evidences'):
        # One table row per piece of evidence (file and line) for the finding
        for single_evid in single.get('evidences'):
            mdlist.append('| {} | {} | {} | {} | {} | {} | {} |\n'.format(
                name,
                single_evid.get('url'),
                single_evid.get('line_number'),
                "High",
                "Security Finding",
                desc,
                cwe
            ))
final_md = "".join(mdlist)
# Post the Markdown table as a note (comment) on the Merge Request
mr.notes.create({'body': final_md})
```

The end result looks like this, and arrives as feedback within 10 seconds of the Merge Request being created by the developer